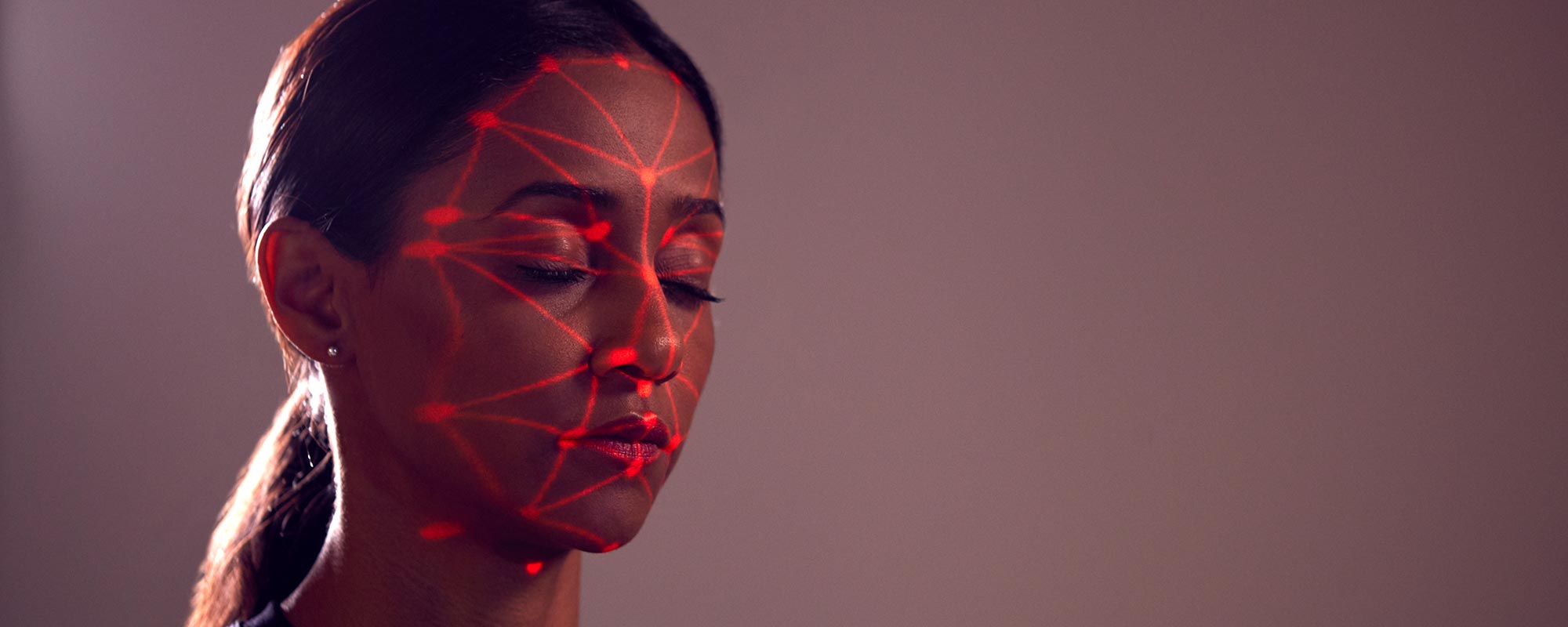

Clearview AI broke Canadian law when it scraped the internet for 3 billion photos of people, including possibly millions of Canadians, created biometric identifiers from those photos, and sold their facial recognition tool to police forces across Canada. It was mass surveillance. It was illegal. These are the findings of the joint investigation by the federal, BC, Alberta and Quebec Privacy Commissioners, released February 3rd, 2021. And police forces across the country used Clearview facial recognition tools, without due diligence or public transparency.

Today’s investigation report is damning and explicit: Clearview AI is at fault for flogging a tool that flagrantly violates Canadian law. In part this hinges on the concept of meaningful and informed consent, a principle at the heart of our law. Scraping images, contrary to the terms of service of many of the platforms, and using them for a purpose that individuals posting a pic for Grandma could never imagine, subverts consent. It is not the case that all information that is posted online is free and fair game for secondary uses. When people post information, they do so with a reasonable expectation that platform rules will be followed and their information will be used for those purposes they’ve agreed to, not scooped up by random third parties for completely different purposes. The report clearly states, “information collected from public websites, such as social media or professional profiles, and then used for an unrelated purpose, does not fall under the “publicly available” exception of PIPEDA, PIPA AB or PIPA BC. Nor is this information “public by law”, which would exempt it from Quebec’s Private Sector Law…”. In other words, every private sector privacy law in Canada would require consent, and Clearview didn’t have it.

The other key finding is that Clearview used personal information of people in Canada (including children) for inappropriate purposes, which means that even if they had gotten consent (and they didn’t) the use would still be illegal. The Commissioners said that “the mass collection of images…represents the mass identification and surveillance of individuals by a private entity in the course of commercial activity” for purposes which “will often be to the detriment of the individual whose images are captured” and “create significant risk of harm to those individuals, the vast majority of whom have never been and will never be implicated in a crime.”

The Commissioners recommended that Clearview cease offering their facial recognition tool in Canada; stop collecting, using or disclosing images and biometric facial arrays from individuals in Canada; and delete images and facial arrays of such individuals. Clearview expressly disagreed with the findings and has not committed to following the recommendations, although they announced they were withdrawing from the Canadian market before this report was issued.

The Commissioners’ investigation focused on Clearview and its culpability. But it must be noted that police forces across Canada are also at fault, for embracing the tool without doing assessments of its legality, and for misleading the public about it. When the New York Times broke the story about Clearview AI’s business practices in January 2020, many police forces asked by Canadian media if they were using it said no. When CCLA filed a series of access to information requests to check on those claims, we were beginning to get responses that there were “no responsive records” at about the same time as there was a security breach at Clearview. Their client list leaked, proving that the company had a number of Canadian clients in Nova Scotia, Alberta and Ontario. The Toronto Police Service, the OPP and the RCMP are among those who used it. Some forces claimed it was individual officers or units who experimented with trial versions of the software distributed at conferences, unknown to Chiefs of Police who had originally issued statements that the technology had not been procured or used.

This is not, therefore, just a story about a bad technology actor, but also about a crisis of accountability with regard to police use of a controversial, inherently invasive surveillance technology. While the online world is under-regulated and our laws are out of date when faced with the potential of emerging technology, we do have privacy laws that govern the terms and nature of consent for uses of our personal information. Clearview’s application is not operating in an entirely lawless world, just one in which the law seems to be ignored, both by the company and by the law enforcement agencies that used the product.

As I wrote when the story broke, this brings all of the social debate about facial recognition—should it be banned, are there ever cases where benefits outweigh the risks of its use, how can it be regulated (or simply, can it be effectively regulated)—into clear and urgent focus. Not only does facial recognition facilitate a form of mass surveillance that is profoundly dangerous to human rights in our democracy, but it is a fundamentally flawed technology. Larger, more responsible companies have been afraid to set this tech loose into the world for that reason; indeed, Amazon, IBM and Microsoft have stopped selling their facial recognition tools to police, recognizing they are too prone to facilitating discrimination given their inaccuracy on faces that are Black, Brown, Indigenous, female, young, that is, not male and white. Before the pandemic hit, the parliamentary Standing Committee on Access to Information, Privacy and Ethics planned a study of facial recognition technology, and that would be a good start to wider, deeper consideration of the many issues it raises.

The investigation report, published today, makes one thing clear: it must be a priority to begin the hard but necessary public conversations that ask first if there are contexts in which this technology is appropriate for use in a democracy that values freedom from routine private sector or state scrutiny as we move about our public streets and transact business in private spaces.

A similarly urgent set of conversations is needed about the kinds of accountability and transparency the public deserves and demands in return from police who wish to use increasingly powerful and invasive surveillance technologies. The social license to exercise the powers we grant our law enforcement bodies can only exist in a trust relationship, and before we even get to the question of whether or not there is social benefit in allowing police to use facial recognition technology and if so, whether any benefit outweighs the social risks, we need assurance that our law enforcement bodies are committed to using tools that are lawfully conceived and lawfully implemented.

The Clearview AI debacle and the report that lays it bare provide us with an important reminder of all that we have to lose if we fail to engage with the risks of new technology as well as being open to its benefits. CCLA reiterates our call for a moratorium on facial recognition software until Canada has had a chance, as a nation, to discuss, debate, and dispute first if and then, only if we get past that question, when and how this technology should be used in a rights-respecting democracy.