There is a lot of outrage, blame, and more than a few “I told you so’s” circulating this week about Facebook. News that Cambridge Analytica acquired and used data on 50 million Facebook users to deliberately manipulate millions more has people angry and afraid.

What happened? A researcher, Aleksandr Kogan, paid some users a small fee to download an app and take a personality quiz, which he told them was for research purposes. The app scraped information from those users’ Facebook profiles, and data from all of their friends as well. It was all part of a project to develop psychographic profiles of people, profiles that they hoped would reveal more about a person than their parents or romantic partners knew. Kogan sold the data to Cambridge Analytica (which broke Facebook’s rules), and the company used it to develop techniques to influence voters.

But in all the anger and angst, we’re not asking the right questions about this scandal. The question isn’t how could this happen, but rather, why do we continue to support business models for data collection and use that allow it to happen?

Make no mistake, Facebook followed its own policies. The creator of the app, which was designed to collect users’ data along with that of all of their friends, followed the policy in place at the time too, at least up to the point he sold the data to a third party (and to be fair, the policy about collecting “friends” data changed in 2015). Facebook’s initial approach was to defend itself by saying no systems were hacked and no information was stolen, but it’s arguable that only makes things worse: this happened because it was allowed to happen, on purpose.

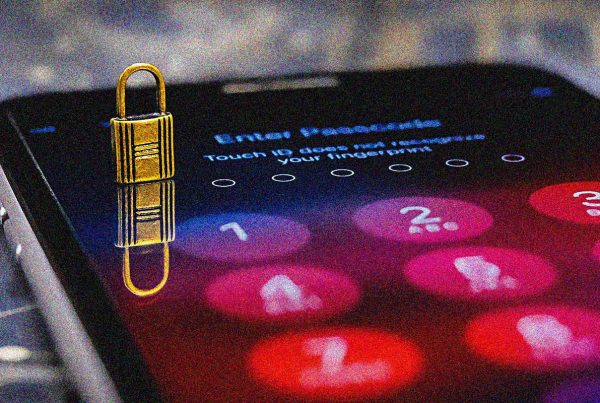

It’s time to ask whether we as a society are willing to tolerate those policies that allow our data to be collected in bulk, shared or sold, often without consent and certainly without informed consent, because we’ve clicked “I agree” to a take-it-or-leave-it button on a terms of service agreement.

It’s time to recognise big data, and data-driven profiling and decision-making, for the civil liberties issues that they are. When data is collected and used to make decisions about us, market products or even politicians to us, predict our behavior and try to manipulate us, it not only erodes our privacy but puts our free speech at risk and, because of the ways information gets grouped, often subjects us (or others) to discrimination.

Canada’s Privacy Commissioner is investigating whether Canadian data was included in the 50 million accounts. Canadian politicians are asking Facebook directly whether personal information of Canadians has been compromised.

But whether or not it was this time, next time it may well be if we don’t do a better job of holding companies to account for the ways they set data policies that put private interests above privacy, and the ways they fail to adhere to Canadian privacy laws. We also need to take a good hard look at updating those laws, a move that CCLA and others have called for repeatedly and that has been the subject of studies, papers, and consultations, but has yet to see positive action.

The Cambridge Analytica/Facebook story is a harsh wake-up call that reminds us that the question when it comes to big data applications cannot always be “what can we do with this powerful technology” but rather must be “is this a good use of a powerful technology?” Let’s start asking the right questions.

About the Canadian Civil Liberties Association

The CCLA is an independent, non-profit organization with supporters from across the country. Founded in 1964, the CCLA is a national human rights organization committed to defending the rights, dignity, safety, and freedoms of all people in Canada.

For the Media

For further comments, please contact us at media@ccla.org.